The TT Shop is located centrally in Bedford in the UK with easy access from the major motorways. Come and visit us for the widest selection of Audi TT specific products in the world. The TT Shop has, for the twelfth year running, been awarded the Audi Driver Gold Award for ‘Independent Accessory Supplier 2014’. We are the only company to win the award 12 years in a row! We feel that we are one of the premier VAG performance tuning and servicing companies. With TTS, the non-TT side of our business, also going from strength to strength, we will continue to offer award-winning levels of products and customer service. We know it’s difficult to strike a balance between great products, great service, expertise, and a great price. Since the introduction of the TTS Price Match Guarantee, this is no longer a problem.

You can get the right product, at the right price and fitted expertly. If you find a reputable supplier that has the expertise and stock, we’ll price match them also! Company Reg: 5146165 – VAT No.

Our products are backed with excellent customer support and tuning experts. This guide describes each setting and its potential effect to help you make an informed decision about its relevance to your system, workload, performance, and energy usage goals. Be sure to use the latest tuning guidelines to avoid unexpected results.

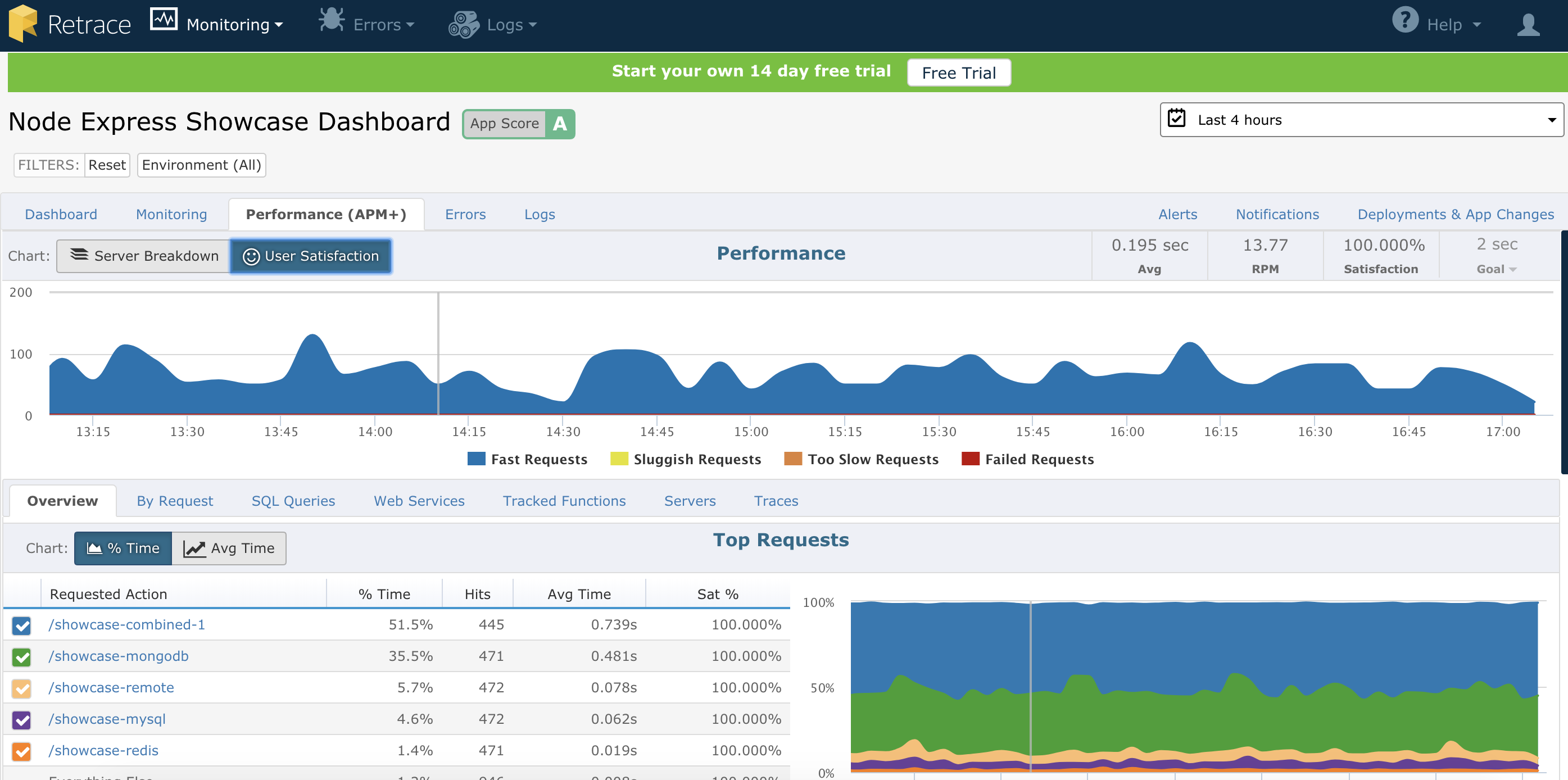

Performance tuning is the improvement of system performance. Typically in computer systems, the motivation for such activity is called a performance problem, which can be either real or anticipated. Most systems will respond to increased load with some degree of decreasing performance. Systematic tuning follows these steps:

1. Assess the problem and establish numeric values that categorize acceptable behavior.
2. Measure the performance of the system before modification.
3. Identify the part of the system that is critical for improving the performance. This part is called the bottleneck.
4. Modify that part of the system to remove the bottleneck.
5. Measure the performance of the system after modification.
6. If the modification makes the performance better, adopt it. If the modification makes the performance worse, put it back the way it was.

This is an instance of the measure-evaluate-improve-learn cycle from quality assurance. A performance problem may be identified by slow or unresponsive systems. This usually occurs because of high system loading, which causes some part of the system to reach a limit in its ability to respond.

This limit within the system is referred to as a bottleneck. A handful of techniques are used to improve performance. Among them are code optimization, load balancing, caching strategy, distributed computing and self-tuning.

Performance analysis, commonly known as profiling, is the investigation of a program’s behavior using information gathered as the program executes. Its goal is to determine which sections of a program to optimize. A profiler is a performance analysis tool that measures the behavior of a program as it executes, particularly the frequency and duration of function calls.
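As a minimal sketch of what a profiler reports, the example below uses Python's standard `cProfile` and `pstats` modules to record how often each function is called and how long it takes. The functions `slow_sum` and `workload` are hypothetical stand-ins for real application code, not something taken from the text.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Hypothetical hot spot: builds a throwaway list on every call."""
    return sum([i * i for i in range(n)])


def workload():
    """Hypothetical workload that calls slow_sum many times."""
    return [slow_sum(2_000) for _ in range(200)]


def profile_workload():
    """Run the workload under cProfile and return the report as text."""
    profiler = cProfile.Profile()
    profiler.enable()
    workload()
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time and keep only the five most expensive entries.
    stats.sort_stats("cumulative").print_stats(5)
    return buffer.getvalue()


report = profile_workload()
print(report)
```

The `ncalls` and `cumtime` columns of the report correspond to the frequency and duration of function calls, which is usually enough to decide which section of a program deserves attention first.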

Performance analysis tools existed at least from the early 1970s. Profilers may be classified according to their output types, or their methods for data gathering. Performance work also covers the elaboration of the processes in use cases and system volumetrics, and service management, including activities performed after the system has been deployed. Each of these frameworks exposes hundreds of configuration parameters that considerably influence the performance of such applications.

Caching is a fundamental method of removing performance bottlenecks that are the result of slow access to data. Caching improves performance by retaining frequently used information in high speed memory, reducing access time and avoiding repeated computation. Caching is an effective way of improving performance in situations where the principle of locality of reference applies.
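The effect of caching can be sketched with Python's standard `functools.lru_cache`. In this illustration `slow_lookup` is a hypothetical function that simulates slow data access with a short sleep, and the request pattern is chosen (as an assumption) to repeat a few keys, so that locality of reference applies.

```python
import time
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the slow path is actually taken


def slow_lookup(key):
    """Hypothetical slow data access, simulated with a short sleep."""
    CALLS["count"] += 1
    time.sleep(0.005)
    return key * 2


@lru_cache(maxsize=128)
def cached_lookup(key):
    """Same result as slow_lookup, but repeated keys are served from memory."""
    return slow_lookup(key)


def timed(func, keys):
    """Call func for every key and return (results, elapsed seconds)."""
    start = time.perf_counter()
    results = [func(k) for k in keys]
    return results, time.perf_counter() - start


keys = [1, 2, 3] * 10  # 30 requests, only 3 distinct keys
uncached_results, uncached_seconds = timed(slow_lookup, keys)
cached_results, cached_seconds = timed(cached_lookup, keys)

print(f"uncached: {uncached_seconds:.3f}s, cached: {cached_seconds:.3f}s")
print(cached_lookup.cache_info())
```

The cached run only pays for the first request of each distinct key. With a workload that rarely repeats keys, the same cache would bring little benefit, which is why measuring before and after matters.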

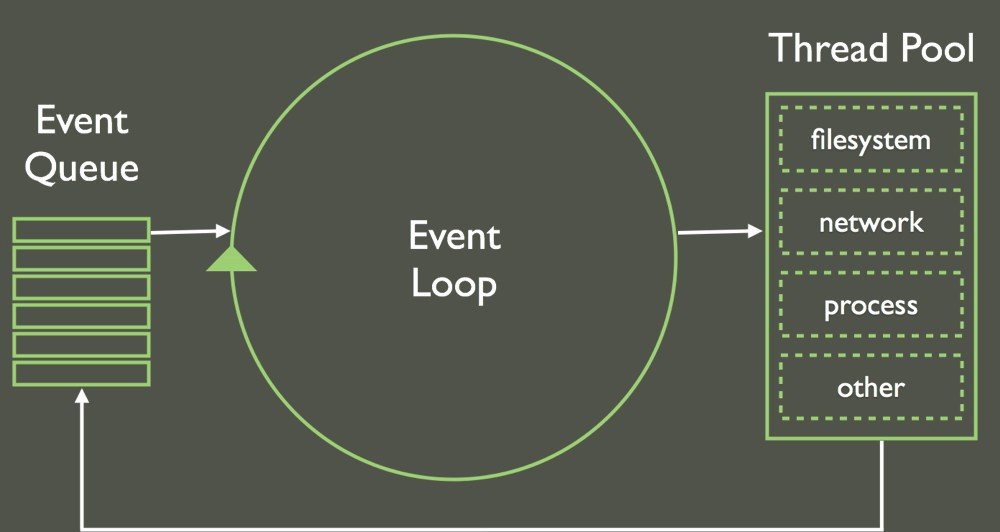

A system can consist of independent components, each able to service requests. Arranging for all components to be used equally is referred to as load balancing and can improve overall performance. Load balancing is often used to achieve further gains from a distributed system by intelligently selecting which machine to run an operation on, based on how busy all potential candidates are and how well suited each machine is to the type of operation that needs to be performed. As the trend of increasing the potential for parallel execution on modern CPU architectures continues, the use of distributed systems is essential to achieve performance benefits from the available parallelism. Distributed computing and clustering can negatively impact latency while simultaneously increasing load on shared resources, such as database systems. To minimize latency and avoid bottlenecks, distributed computing can benefit significantly from distributed caches. The bottleneck is the part of a system which is at capacity. Other parts of the system will be idle waiting for it to perform its task.
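The load-balancing selection described above can be sketched as follows. This is one simple policy (pick the least busy machine among those suited to the operation), not the only possible one. The `Worker` class, its fields, and the operation names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    """Hypothetical machine that can service certain kinds of operations."""
    name: str
    capabilities: frozenset
    active_requests: int = 0


def pick_worker(workers, operation):
    """Return the least busy worker that is suited to the operation."""
    candidates = [w for w in workers if operation in w.capabilities]
    if not candidates:
        raise ValueError(f"no worker can handle {operation!r}")
    # min() returns the first worker with the lowest load, so ties are
    # resolved in list order.
    return min(candidates, key=lambda w: w.active_requests)


def dispatch(workers, operation):
    """Assign the operation to a worker and record the extra load."""
    worker = pick_worker(workers, operation)
    worker.active_requests += 1
    return worker.name


pool = [
    Worker("a", frozenset({"read", "write"})),
    Worker("b", frozenset({"read"})),
    Worker("c", frozenset({"read"})),
]
for op in ["read", "read", "read", "write", "read"]:
    print(op, "->", dispatch(pool, op))
```

A real balancer would also decrement the load when a request completes and would typically weigh machines by capacity. Both are left out here to keep the sketch short.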

In the process of finding and removing bottlenecks, it is important to prove their existence, such as by sampling, before acting to remove them. There is a strong temptation to guess. Guesses are often wrong, and investing only in guesses can itself be a bottleneck.

Looking for improved drivability, increased horsepower and faster acceleration? Need that extra towing power or improved shift points for the track? Or are you just tired of feeling like you’ve been short-changed on power output due to all of the restrictions programmed into your vehicle?

Let us put our experience to work for you. Black Bear Performance offers in-person and mail order computer tuning for your GM car, truck, or SUV with a twist.

In computing, computer performance is the amount of useful work accomplished by a computer system. Outside of specific contexts, computer performance is estimated in terms of accuracy, efficiency and speed of executing computer program instructions. Depending on the context, good computer performance may involve, among other things, a short response time for a given piece of work and high availability of the computing system or application.

The performance of any computer system can be evaluated in measurable, technical terms, using one or more of the metrics listed above. The word performance in computer performance means the same thing that performance means in other contexts, that is, it means “How well is the computer doing the work it is supposed to do?” Performance engineering within systems engineering encompasses the set of roles, skills, activities, practices, tools, and deliverables applied at every phase of the systems development life cycle which ensures that a solution will be designed, implemented, and operationally supported to meet the performance requirements defined for the solution. Performance engineering continuously deals with trade-offs between types of performance. Occasionally a CPU designer can find a way to make a CPU with better overall performance by improving one of the aspects of performance, presented below, without sacrificing the CPU’s performance in other areas. Availability of a system may also be increased by the strategy of focusing on increasing testability and maintainability and not on reliability.

Improving maintainability is generally easier than improving reliability.

Response time is the total amount of time it takes to respond to a request for service. In computing, that service can be any unit of work from a simple disk IO to loading a complex web page. The response time is the sum of three numbers:

- Service time: how long it takes to do the work requested.
- Wait time: how long the request has to wait for requests queued ahead of it before it gets to run.
- Transmission time: how long it takes to move the request to the computer doing the work and the response back to the requestor.

Some system designers building parallel computers pick CPUs based on the speed per dollar.
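The breakdown of response time into service time, wait time, and the time spent moving the request and response can be written down directly. The sketch below is only an illustration: the `RequestTiming` class, its field names, and the sample numbers are assumptions, not measurements from any real system.

```python
from dataclasses import dataclass


@dataclass
class RequestTiming:
    """Hypothetical per-request timings, all in milliseconds."""
    service_ms: float       # time spent doing the requested work
    wait_ms: float          # time spent queued behind other requests
    transmission_ms: float  # time moving the request and the response

    @property
    def response_ms(self):
        """Total response time is the sum of the three components."""
        return self.service_ms + self.wait_ms + self.transmission_ms

    def dominant_component(self):
        """Name the component that contributes most to the response time."""
        parts = {
            "service": self.service_ms,
            "wait": self.wait_ms,
            "transmission": self.transmission_ms,
        }
        return max(parts, key=parts.get)


timing = RequestTiming(service_ms=12.0, wait_ms=40.0, transmission_ms=8.0)
print(timing.response_ms)           # 60.0
print(timing.dominant_component())  # wait
```

In this made-up case most of the response time is queueing, so making the work itself faster would help less than reducing the load on the queue.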

Channel capacity is the tightest upper bound on the rate of information that can be reliably transmitted over a communications channel. Information theory, developed by Claude E. Shannon during World War II, defines the notion of channel capacity and provides a mathematical model by which one can compute it. Latency is a time delay between the cause and the effect of some physical change in the system being observed. Latency is a result of the limited velocity with which any physical interaction can take place. This velocity is always lower than or equal to the speed of light. Therefore, every physical system that has spatial dimensions different from zero will experience some sort of latency.
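The speed-of-light bound gives a simple lower limit on one-way latency: distance divided by propagation speed. The sketch below computes that limit. The function name and the `velocity_factor` parameter (the fraction of the speed of light at which a signal travels in a given medium) are assumptions introduced for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def min_one_way_latency_s(distance_m, velocity_factor=1.0):
    """Lower bound on one-way latency over a distance, in seconds.

    velocity_factor is the assumed fraction of the speed of light at which
    the signal propagates in the medium (1.0 means vacuum).
    """
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if not 0 < velocity_factor <= 1:
        raise ValueError("velocity_factor must be in (0, 1]")
    return distance_m / (SPEED_OF_LIGHT_M_PER_S * velocity_factor)


# A 6,000 km link cannot deliver a signal in less than about 20 ms,
# regardless of how fast the endpoints are.
print(f"{min_one_way_latency_s(6_000_000) * 1000:.2f} ms")
```

Real latencies are higher, because signals in cables travel slower than light in a vacuum and every intermediate device adds processing and queueing delay.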

The precise definition of latency depends on the system being observed and the nature of stimulation. In communications, the lower limit of latency is determined by the medium being used for communications. In reliable two-way communication systems, latency limits the maximum rate at which information can be transmitted, as there is often a limit on the amount of information that is “in-flight” at any one moment. Computers run sets of instructions called processes. In operating systems, the execution of a process can be postponed if other processes are also executing. In addition, the operating system can schedule when to perform the action that the process is commanding. For example, suppose a process commands that a computer card’s voltage output be set high-low-high-low and so on at a rate of 1000 Hz. System designers building real-time computing systems want to guarantee worst-case response.


That is easier to do when the CPU has low interrupt latency and when it has deterministic response. For example, bandwidth tests measure the maximum throughput of a computer network. In general terms, throughput is the rate of production or the rate at which something can be processed. In communication networks, throughput is essentially synonymous with digital bandwidth consumption. In integrated circuits, a block in a data flow diagram often has a single input and a single output, and operates on discrete packets of information. Examples of such blocks are FFT modules or binary multipliers. Power consumption is the amount of electricity used by the computer. This becomes especially important for systems with limited power sources such as solar, batteries, and human power.

System designers building parallel computers, such as Google’s hardware, pick CPUs based on their speed per watt of power, because the cost of powering the CPU outweighs the cost of the CPU itself. Compression is useful because it helps reduce resource usage, such as data storage space or transmission capacity. Size and weight are an important performance feature of mobile systems, from the smart phones you keep in your pocket to the portable embedded systems in a spacecraft. Environmental impact is the effect of a computer or computers on the environment, during manufacturing and recycling as well as during use. Measurements are taken with the objectives of reducing waste, reducing hazardous materials, and minimizing a computer’s ecological footprint. Transistor count is the most common measure of IC complexity. Because there are so many programs to test a CPU on all aspects of performance, benchmarks were developed. In software engineering, performance testing is in general testing performed to determine how a system performs in terms of responsiveness and stability under a particular workload.

It can also serve to investigate, measure, validate or verify other quality attributes of the system, such as scalability, reliability and resource usage. Performance testing is a subset of performance engineering, an emerging computer science practice which strives to build performance into the implementation, design and architecture of a system. A number of different techniques may be used by profilers, such as event-based, statistical, instrumented, and simulation methods. Performance tuning is the improvement of system performance. This is typically a computer application, but the same methods can be applied to economic markets, bureaucracies or other complex systems. The motivation for such activity is called a performance problem, which can be real or anticipated.


Beyond tools, debugging needs to be driven by the actual requirements that the app has. Depending on those requirements, you might want one tool that does everything or a whole range of tools for different purposes. Assume that the architecture and code of your project are either optimal or have already been tuned. What you need to keep in mind is that Java VM tuning can’t solve all performance issues.

Perceived performance, in computer engineering, refers to how quickly a software feature appears to perform its task. The concept applies mainly to user acceptance aspects. Showing a progress indicator, for example, does not make an operation any faster. However, it satisfies some human needs: it appears faster to the user as well as providing a visual cue to let them know the system is handling their request. In most cases, increasing real performance increases perceived performance, but when real performance cannot be increased due to physical limitations, techniques can be used to increase perceived performance.

In CPU design, the code density of the instruction set strongly affects N, the number of instructions actually executed for a program. A CPU designer is often required to implement a particular instruction set, and so cannot change N. Many other factors are also potentially in play.

All of these factors lower performance from its base value and, most importantly to the user, lower perceived performance.

Sripal is a perfectionist website performance optimization engineer, experienced in front-end, back-end, as well as database development. To retain its users, any application or website must run fast. In mission-critical environments, a delay of a couple of milliseconds in getting information might create big problems.

If we have a problem with it, we need to discover why it is not running as we expect. To check what is happening, we need to observe how different threads are performing. This is achieved by calculating wait statistics of different threads. Wait statistics can be calculated using sys. We need to understand wait types so we can make the correct decisions. To learn about different wait types, we can go to the excellent Microsoft documentation. Let’s take an example where we have too much PAGEIOLATCH_XX. This means a thread is waiting for data page reads from the disk into the buffer, which is simply a block of memory. We must be sure we understand what’s going on.

Increasing the I/O subsystem and memory will solve the problem, but only temporarily. We need to think from different angles and use different test cases to come up with solutions. Each of the above wait types needs a different solution. A database administrator needs to research them thoroughly before taking any action. But most of the time, finding problematic T-SQL queries and tuning them will solve 60 to 70 percent of the problems.

2 Finding Problematic Queries

As mentioned above, the first thing we can do is to search for problematic queries.